Local LLM with ollama

Installing and Using Ollama - A Guide for Local LLMs

11.06.2025

Installing and Using Ollama: A Guide for Local LLMs

Introduction

This post is about how to run a local Large Language Model (LLM) on your own PC. If you have a fairly modern computer, it should generally work well. You'll find the hardware requirements in the next section.

Why should you set up a local LLM?

- You don't need an internet connection when using the LLM

- You can be sure that your own data isn't being used to train an LLM. This is important if you're processing or analyzing confidential data with the LLM.

- You can use and experiment with different open-source LLMs and evaluate them.

- You can use it for private coding assistants

- You can use it for private RAG (Retrieval-Augmented Generation) systems

These are just some of the many possible applications for local LLMs.

Now, let's get to the main topic: How do I set up a local LLM? For this, I want to use the tool ollama, which is easy to install and use. I should mention that this is a CLI (Command Line Interface) application. Furthermore, ollama can also provide a REST API, which you can then use with your own apps or run other open-source applications through it.

Hardware Requirements

I've been using an Apple device for many years, so I'll only make statements for a Mac.

- Operating System: macOS 11 Big Sur or newer

- Processor: A processor with Apple Silicon is recommended, although there are reports that it also works on an Intel processor (Source: Reddit).

- RAM:

- At least 8 GB RAM for 7B models

- 16 GB RAM for 13B models

- 32 GB RAM for larger models like 33B

- Storage: At least 50 GB of free storage space is recommended, as the models themselves can be several gigabytes in size.

- GPU: On Macs with Apple Silicon (M1/M2/M3 chips), the integrated GPU is used.

- For optimal performance, especially with larger models, it's recommended to:

- Use newer Mac models with Apple Silicon (M1/M2/M3)

- Provide as much RAM as possible

- Ensure sufficient storage space for the models and generated data

Installation on Mac

- Open your browser and go to the official Ollama website.

- Download the Mac version of Ollama.

- Open the downloaded file and follow the installation instructions.

- After installation, open the Terminal.

- Enter "ollama run llama3.1" to load and start the Llama LLM version 3.1. You can find more info about the model here: llama3.1

Using Ollama

After installation, you can use Ollama as follows:

- Open the Terminal on your Mac.

- To load and start a model, enter:

ollama run llama2

The first time you run this command, it may take a while as the model needs to be downloaded first. Depending on the model size and your internet speed, it can take shorter or longer. Be patient 😊

When the model download is complete, you should see something like this in your Terminal:

pulling manifest

pulling 8eeb52dfb3bb... 100% ▕████████████████████████████████████████████████████████████████████████████████████▏ 4.7 GB

pulling 948af2743fc7... 100% ▕████████████████████████████████████████████████████████████████████████████████████▏ 1.5 KB

pulling 0ba8f0e314b4... 100% ▕████████████████████████████████████████████████████████████████████████████████████▏ 12 KB

pulling 56bb8bd477a5... 100% ▕████████████████████████████████████████████████████████████████████████████████████▏ 96 B

pulling 1a4c3c319823... 100% ▕████████████████████████████████████████████████████████████████████████████████████▏ 485 B

verifying sha256 digest

writing manifest

success

>>> Send a message (/? for help)

Now you can chat with the model. For example, you could ask:

>>> What are the 4 seasons? Explain it to me as if I were a 5-year-old

Additional Functions of ollama

Displaying Local Models

To display the local models, you can use this command:

$ ollama list

NAME ID SIZE MODIFIED

llama3.1:latest 42182419e950 4.7 GB 8 minutes ago

Since we have a fresh installation of ollama and have only downloaded one model, we only see one entry.

Information on Local Models

To get more information about a local model, you can use this command:

$ ollama show llama3.1

Model

parameters 8.0B

quantization Q4_0

arch llama

context length 131072

embedding length 4096

Parameters

stop "<|start_header_id|>"

stop "<|end_header_id|>"

stop "<|eot_id|>"

License

LLAMA 3.1 COMMUNITY LICENSE AGREEMENT

Llama 3.1 Version Release Date: July 23, 2024

In this example, we see that this is an 8B (Billion) parameter model and that it's based on the llama architecture... as the name already suggests 😄.

But this is how you can view the necessary info and check if it fits your use case.

Downloading Additional Models

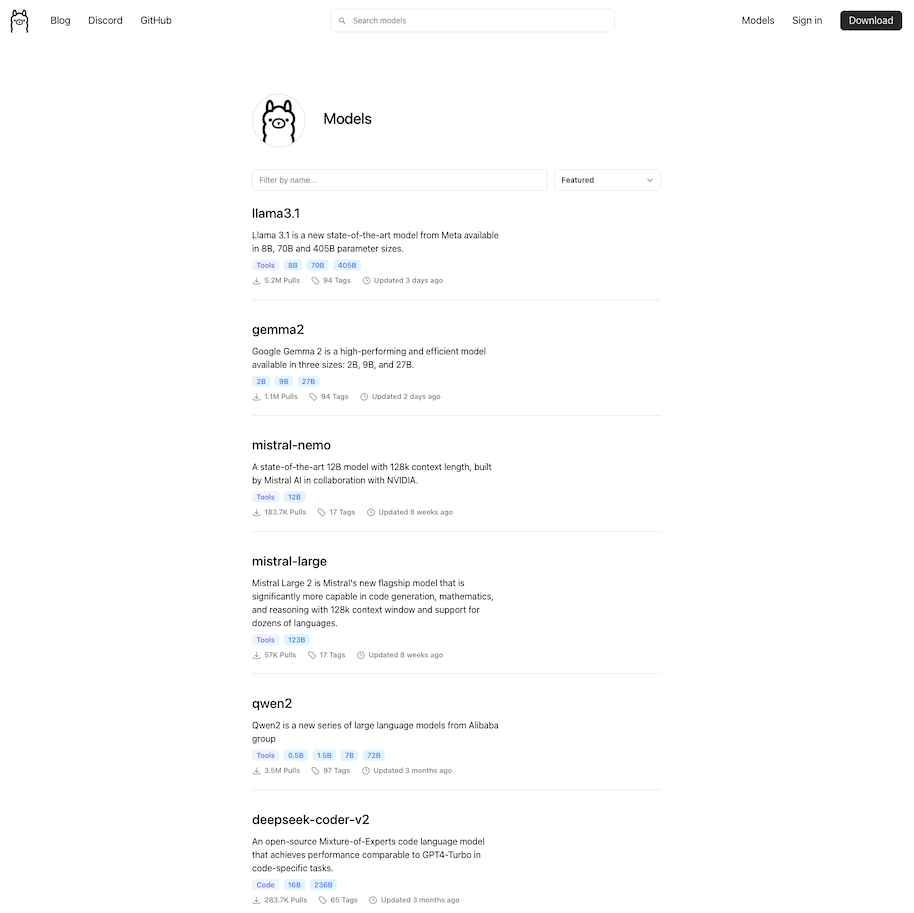

Ollama doesn't support all models available in the LLM open-source community. An overview of the models available in ollama can be found in the Ollama Library.

In the screenshot, you can see a small part of the available models. To download a model now, you run this command:

In the screenshot, you can see a small part of the available models. To download a model now, you run this command:

$ ollama pull gemma2

In this example, I'm downloading Google's gemma2 model. If you want to specify a selection of parameters, you do it like this. Information on what the command should look like to get the right parameter size can be found here:

$ ollama pull gemma2:2b

Providing an API

If you want to do more than just chat via the CLI, and want other applications to access the LLM, an API needs to be provided. You can achieve this with this command:

$ ollama serve

Usually, the API starts automatically when you start the desktop app. If the ollama desktop app is already running, you'll see this message:

$ Error: listen tcp 127.0.0.1:11434: bind: address already in use

Despite this error message, you can send a CURL request to check the functionality. For example, this one:

curl http://localhost:11434/api/generate -d '{

"model": "llama3.1",

"prompt": "What are the 4 seasons? Explain it to me as if I were a 5-year-old",

"stream": false

}'

And the result should look like this:

{

"model":"llama3.1",

"created_at":"2024-09-18T18:52:35.80851Z",

"response":"The 4 seasons are:\n\n1. **Spring**: Spring is a beautiful time! The sun gets stronger, and it gets warmer and warmer. The trees and bushes get green leaves, and the flowers start to bloom. There are many beautiful colors and smells in nature!\n2. **Summer**: In summer, it's often very warm and sunny! You can enjoy playing in the sand or by the water. The days are long, and you have lots of time to have fun. It's a great time for picnics, playground visits, and many other joys!\n3. **Autumn**: In autumn, it gets colder and rougher again. The leaves on the trees start to fall, and nature gets a bit of color in gold, orange, and red. It's a beautiful time for walks and for collecting nuts or fallen apples!\n4. **Winter**: In winter, it's often very cold! The sun isn't as strong, and you should dress warmly when you're outside. There's a lot of snow and ice, and many children enjoy sledding! It's also a good time for indoor games and activities.\n\nEach season has its own beauty and opportunities for playing and relaxing!",

"done":true,

"done_reason":"stop",

"context":[

128006,882,128007,271,27125,12868,2815,220,19,98848,3059,15010,30,9939,10784,47786,8822,6754,10942,69673,10864,4466,220,20,503,38056,7420,128009,128006,78191,128007,271,18674,220,19,98848,3059,15010,12868,1473,16,13,3146,23376,22284,2785,96618,13031,2939,22284,2785,6127,10021,92996,29931,0,8574,12103,818,15165,27348,357,14304,7197,11,2073,1560,15165,26612,289,14304,1195,13,8574,426,2357,3972,2073,69657,82,1557,75775,2895,16461,2067,14360,466,11,2073,2815,2563,28999,3240,12778,6529,1529,2448,12301,13,9419,28398,43083,92996,13759,8123,2073,20524,2448,1557,304,2761,40549,4999,17,13,3146,50982,1195,96618,2417,80609,6127,1560,43146,26574,8369,2073,4538,77,343,0,2418,16095,9267,55164,737,8847,12666,1097,74894,15756,76,17912,13,8574,72277,12868,8859,11,2073,893,9072,37177,29931,11,9267,6529,22229,36605,13,9419,6127,10021,39674,273,29931,7328,20305,77,5908,11,32480,58648,7826,288,34927,2073,43083,34036,7730,61227,4999,18,13,3146,21364,25604,96618,2417,6385,25604,15165,1560,27348,597,30902,466,2073,436,28196,13,8574,2563,14360,466,459,3453,426,2357,28999,3240,12778,6529,21536,11,2073,2815,40549,94177,2562,4466,293,1056,7674,13759,1395,304,7573,11,22725,2073,28460,13,9419,6127,10021,92996,29931,7328,3165,1394,1291,70,77241,2073,7328,82,8388,76,17912,6675,452,1892,12,12666,5345,59367,370,69,33351,268,4999,19,13,3146,67288,96618,2417,20704,6127,1560,43146,26574,597,3223,0,8574,12103,818,6127,8969,779,38246,11,2073,893,52026,9267,8369,459,13846,12301,11,22850,2781,45196,84,27922,6127,13,9419,28398,37177,5124,34191,2073,97980,11,2073,43083,45099,83857,27922,3453,50379,23257,98022,0,9419,6127,11168,10021,63802,29931,7328,64368,6354,20898,273,2073,7730,553,275,74707,65421,2002,382,41,15686,98848,30513,9072,35849,35834,1994,5124,24233,23190,2073,67561,52807,16419,20332,8564,2073,4968,1508,12778,0

],

"total_duration":10083803708,

"load_duration":31196166,

"prompt_eval_count":33,

"prompt_eval_duration":283579000,

"eval_count":328,

"eval_duration":9767887000

}

As we can see, we get much more information via the API than through the chat. If we now want to calculate the speed (tokens per second), we would need to do the following calculation:

eval_count / eval_duration * 10^9

eval_countis the size of the response in tokens. A token is usually more than one letter.eval_durationelapsed time in nanoseconds

This results in a speed of 33.57 tokens per second.

Further information about the ollama REST API can be found on the ollama Github Project.

Summary

In this tutorial, you've seen how to set up and use ollama on your Mac. I've introduced the simple chat via the command line and how you can access the API. Additionally, you've learned about a few elementary functions of ollama.

Now it's your turn, set up your local LLM and start chatting away!